This article is a terrific overview of developments in automated text analysis. My hope is that these technologies can open many doors to academic and non-academic research in digital archives.

Loon, Austin van. 2022. “Three Families of Automated Text Analysis.” Social Science Research 108 (November): 102798.

This page is a precis of this article with important definitions of functions that could be applied to ocr scanned digital archival materials.

A key concept: "text as data"

The idea of "text" has changed. This article introduces three major changes in the role of text in society that make it an important source of research information. Evidence, first, of the new role of text in society comes from two sources:

Poe, M. T. (2010). A History of Communications: Media and Society from the Evolution of Speech to the Internet. Cambridge University Press.

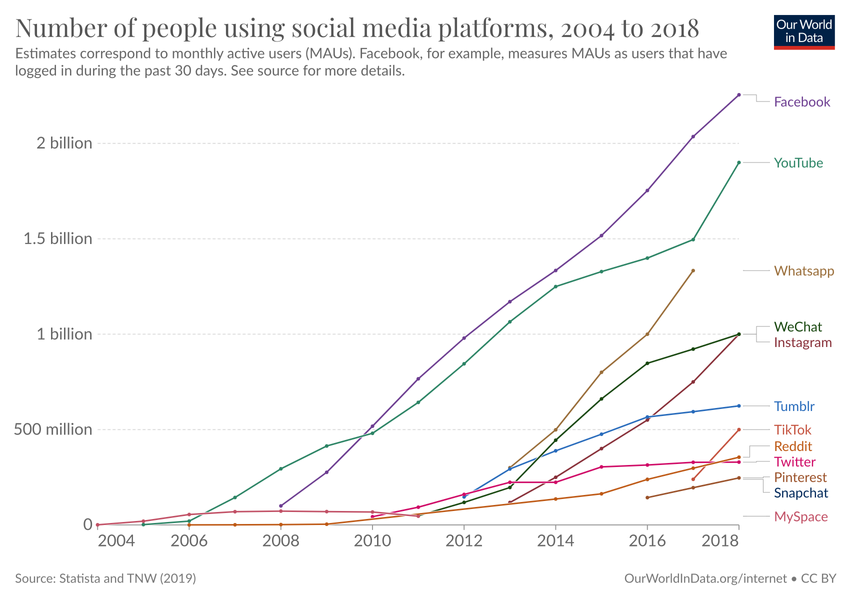

Evidence of the influence of social media.

Roser, M., & Ortiz-Ospina, E. (2016). Literacy. Our world in data. Our World in Data.

Evidence of the prevalence of text.

What are the advantages of automated text analysis?

- Natural language is more expressive and representative of thoughts and feelings than a Likert scale response.

- Digitally stored text exchanges are available in perfect fidelity. No reduction from the phenomenon being analyzed to the data available.

- Because text corpora are ubiquitous gives access to populations who might otherwise not participate. Judges, celebrities, CEOs.

- ATA grossly simplifies written language.

- Meaning is an elusive construct. (e.g. although the phrases “Mark Granovetter is smart” and “The author of ‘The Strength of Weak Ties’ possesses great intelligence” might mean the same thing for all intents and purposes, they actually have no words in common).

What are the three families of automated text analysis?

- term frequency analysis. This analysis represents text as observations that vary in how often certain strings of characters (e.g., words) appear.

- document structure analysis. This analysis assumes one can extract from word co-occurrence statistics what any given document is “about” (i.e., what the appropriate keywords or themes are) and represents text as observations that vary on this feature.

- semantic similarity analysis. This analysis attempts to quantify the meaning of strings of characters and represents texts as collections of such meanings.

Gentzkow, Matthew, Bryan Kelly, and Matt Taddy. 2019. “Text as Data.” Journal of Economic Literature 57 (3): 535–74.Grimmer, Justin, and Brandon M. Stewart. 2013. “Text as Data: The Promise and Pitfalls of Automatic Content Analysis Methods for Political Texts.” Political Analysis: An Annual Publication of the Methodology Section of the American Political Science Association 21 (3): 267–97.

What are some of the advantages of this more analytical typology over other, more practical ones?

- Features can be applied systematically across theoretical constructs.

- The features lens helps theories of social science keep up with innovations in computational linguistics.

- The systematic analysis of word choice in communication. This is measured in how often particular terms are used at scale.

- There of two categories of such methods: closed-vocabulary (a-priori sets of theoretical constructs, and open-vocabulary (inductive analysis of patterns some aspect of text).

- The key assumption, often backed by some validation exercise(s), is that the prevalence of one or a set of terms meaningfully corresponds to the theorized construct.

- Example: The "threat dictionary". Upticks of terms related to a set of terms indicates a cultural feeling of threat.

- Sample dictionaries:

- Masculine and feminine words

- Moral foundations dictionary

- Use of stereotypes, Github: https://github.com/gandalfnicolas/SADCAT

- Development process: a) develop "seed words," b) expand seed words using human judgement and word embeddings or WordNet, c) pruning of "too distant" terms, d) repeat.

- Demonstrating validity of a lexicon: 1) convergent validity to show lexical measures correlate with plausible events associated with their theoretical construct, or use words elicited in a survey, or examine contexts of terms in a corpus, 2) divergent validity show the lexical measure is uncorrelated with related but distinct theoretical constructs.

- Linguistic Inquiry and Word Count or LIWC. A general purpose dictionary.

- Definition: the analysis of term frequencies is entirely inductive, allowing for interesting relationships between term frequency and metadata to emerge from the corpus.

- Review of the approach:

Tam, Vivian, Nikunj Patel, Michelle Turcotte, Yohan Bossé, Guillaume Paré, and David Meyre. 2019. “Benefits and Limitations of Genome-Wide Association Studies.” Nature Reviews. Genetics 20 (8): 467–84.

What is a resource for differential language analysis?

Andrew Schwartz, H., Salvatore Giorgi, Maarten Sap, Patrick Crutchley, Johannes C. Eichstaedt, and Lyle Ungar. n.d. Differential Language Analysis ToolKit. Github. Accessed April 9, 2023. https://github.com/dlatk.

- Documents: specific tweets, policy platforms, and text messages

- Document structure analysis relies on the assumption that co-occurrence statistics, and therefore the boundaries of documents, are meaningful.

- Document structure analysis finds "patterns at the level of the document, seeking to estimate hidden patterns in the way words are distributed amongst them."

- Topics: "...can instead be thought of as the clusters of words whose combined presence meaningfully divide [or classify] the documents."

- Two approaches to theory building and testing: the "grounded theory" or inductive, vs. the "abductive approach"

- There are two dominant approaches to document structure analysis.

- The first is a set of methods which infer topics through Bayesian inference and are what are widely referred to as “topic models”.

- The second set of approaches treat the document-term matrix (or a transformation of it) as an adjacency matrix, which is then modeled as a network.

Bayesian approaches: In this approach, a “topic” is modeled as a multinomial distribution over all terms in the vocabulary. Some terms might be assigned higher probabilities than others.

Process: Start with a document and select words that represent topics. Then use the "bag of words" to represent the document-term matrix. Once a matrix is created the researcher can examine other documents.

Example: Given the hypothesis that college applicants from different socio-economic backgrounds write systematically different college admission essays, a researcher could use a correlated topic model to automatically categorize admissions essays. (p. 7)

Example 2: Create a word network from state of the union speeches by American presidents. Connect words if they occur in the same paragraph of the same address, identify clusters of words and what the clusters represent, and trace these over time to follow political consciousness.

No comments:

Post a Comment